We’ve all met people who act like know-it-alls. When given questions outside their own area of expertise, rather than accept the limits of their knowledge, they’ll invent plausible-sounding answers — and they may even come to believe their own nonsense. Whatever inspires such behavior, the problem of confidently incorrect answers polluting the world’s information space isn’t going away anytime soon.

Now consider the implications of generative AI, especially chatbots built on large language models, such as ChatGPT. These tools represent a new kind of conversational partner, one that operates without the human faculties of common sense or self-awareness. As a result, while they can perform complex tasks and generate engaging dialogue, they also risk perpetuating, and even accelerating, the spread of misinformation by fabricating responses or misstating facts.

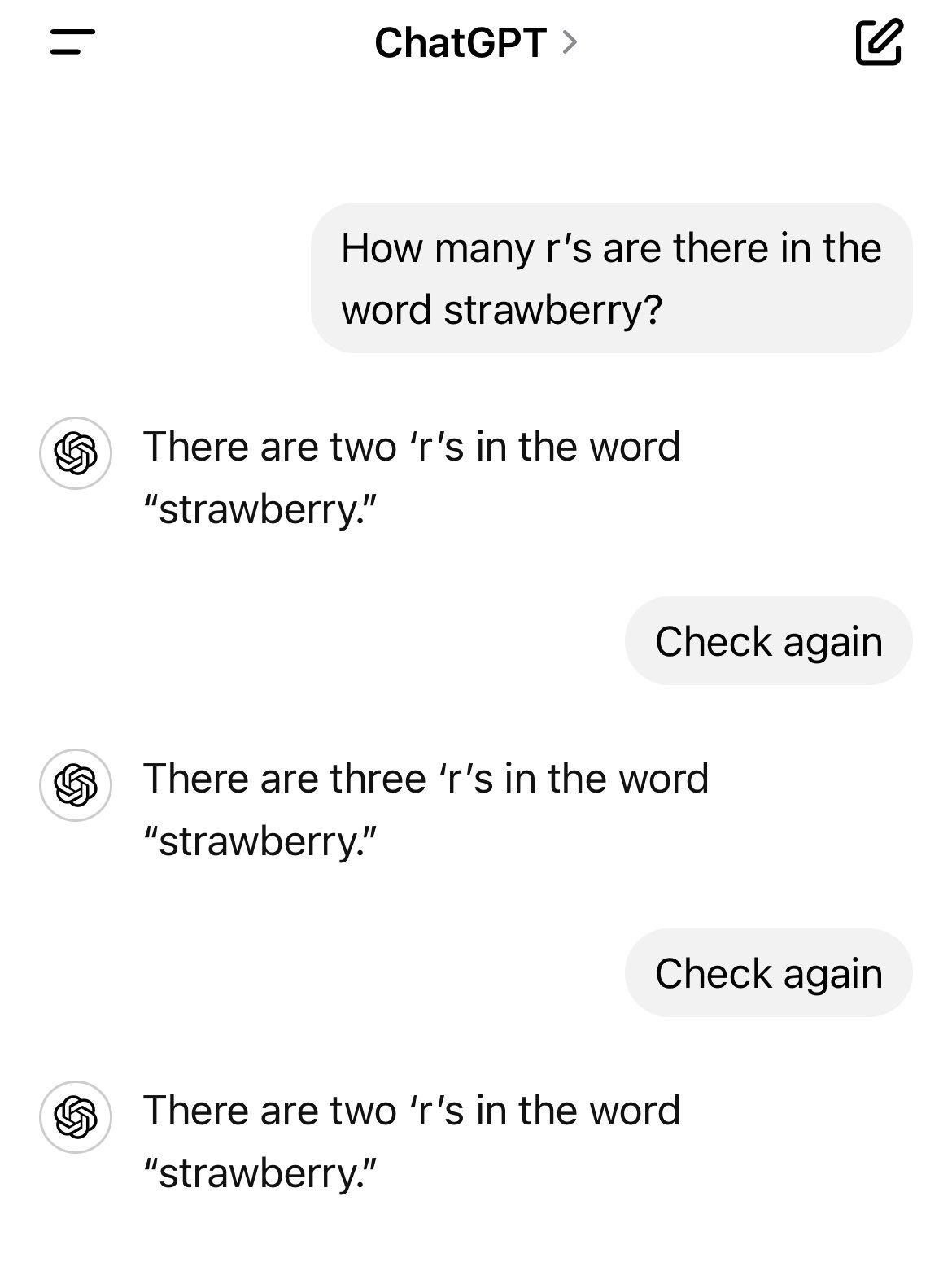

These generative AI tools learn by training on enormous data sets, including large chunks of the internet, in which they find patterns and connections. The AI then draws on those patterns to answer questions or carry on conversations with users. Often the results are magical: the system can tell jokes, write poetry, give you history or physics lessons, and help you plan your next holiday. But it can also deliver dubious output like this:

Like the fictional Rain Man, generative AI can perform challenging tasks brilliantly while failing completely at more mundane requests. Because it never learns to think for itself and can only draw on patterns in the data it was trained on, it can be lost when a question falls outside those patterns.

Instead of admitting its limitations, the AI will often guess without telling the user it is guessing, sometimes to the point of inventing facts that don’t correspond to the real world. These ‘hallucinations’ are amusing when the question is how many r’s appear in the word ‘strawberry’, but far less so when the topic is serious and consequential. Just wait until a hallucinating AI chatbot derails your research project, gives you misleading health advice, or sends incorrect information to one of your customers.

The good news is that the makers of generative AI software are well aware of these issues, and are working hard to minimize them. Moreover, even if the chatbots themselves aren’t aware of their limitations, we are; and we would be wise to keep them in mind when interacting with generative AI.

Indeed, the more we know about these algorithms, the better we can protect ourselves from their mistakes. So let’s take a closer look at how generative AI can go wrong — and how to get reliable information from it anyway.

AI hallucinations and why they appear

Common types of AI hallucinations include the regurgitation of misleading information, or satire, that the AI picked up from internet content and took at face value. That’s how Google’s AI decided that people should put glue on their pizza.

AI also tends to think that every question must have an answer. That’s why, when ChatGPT was asked the nonsensical question, “When was the Golden Gate Bridge transported for the second time across Egypt?”, the chatbot responded, “The Golden Gate Bridge was transported for the second time across Egypt in October of 2016.”

Chatbots have also been known to back up questionable claims with nonexistent references. For example, they may cite a specific book or journal article, complete with author and page number, that simply does not exist. A likely explanation is that the AI has learned what bibliographic citations look like from the text it was trained on, and can reproduce that format convincingly without any way of checking whether the cited work is real.

How to avoid common AI hallucinations

Each iteration of generative AI is getting better at handling new kinds of requests — even ridiculous ones. But you can also minimize the chance of error by:

- Typing clear and concise prompts

- Adding the necessary context

- Giving step-by-step instructions

- Defining the type of output you wish to see

- Providing relevant rules and limitations for the AI to follow (e.g. word count, level of formality, specific areas of focus)

You can also assign a specific task to the AI. Instead of asking it to write an entire story or article, for example, you might ask it to write the introduction only. By making each request more specific and less open-ended, you are more likely to get what you want — while tightening the leash on the AI to prevent it from wandering off in strange directions.
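The checklist above, along with the advice to narrow each request, lends itself to a reusable template. Here is a minimal Python sketch that is not tied to any particular chatbot or API: `PromptSpec` and `build_prompt` are hypothetical names, and the code only assembles the text you would paste into a chat window.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the prompt elements in the checklist."""
    task: str                                          # clear, concise statement of the request
    context: str = ""                                  # background the model needs
    steps: list[str] = field(default_factory=list)     # step-by-step instructions
    output_format: str = ""                            # the type of output you want
    rules: list[str] = field(default_factory=list)     # limits such as word count or tone


def build_prompt(spec: PromptSpec) -> str:
    """Join the filled-in elements into one plain-text prompt, skipping empty ones."""
    parts = [f"Task: {spec.task}"]
    if spec.context:
        parts.append(f"Context: {spec.context}")
    if spec.steps:
        numbered = "\n".join(f"{i}. {s}" for i, s in enumerate(spec.steps, start=1))
        parts.append(f"Steps:\n{numbered}")
    if spec.output_format:
        parts.append(f"Output format: {spec.output_format}")
    if spec.rules:
        bullets = "\n".join(f"- {r}" for r in spec.rules)
        parts.append(f"Rules:\n{bullets}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    # A narrow request: the introduction only, not the whole article.
    spec = PromptSpec(
        task="Write only the introduction to an article about AI hallucinations.",
        context="The audience is non-technical readers of a consumer tech blog.",
        steps=["Define the term in one sentence.", "Give one everyday example."],
        output_format="A single paragraph of plain prose.",
        rules=[
            "No more than 120 words",
            "Friendly, informal tone",
            "If you are unsure of a fact, say so instead of guessing",
        ],
    )
    print(build_prompt(spec))
```

Keeping each element in its own labeled section makes it easy to see what is missing before you send the request. The last rule in the example invites the model to admit uncertainty rather than guess, although no prompt can guarantee that it will.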

A guide, not an authority

Generative AI will likely become more accurate over time, and hallucinations may become rarer. For the foreseeable future, however, it is worth independently verifying any significant factual information that a chatbot produces. Ask for its sources, then check them yourself: confirm that each one exists and actually supports the AI’s claims.

Better yet, if you’re using generative AI as a writing assistant, do the original research yourself at the start of the process. Then paste your findings into the program and tell the chatbot to turn them into clean paragraphs. Getting good results from AI is largely a matter of learning to ask the right questions, and following these tips will give you an excellent head start.

When using any tool, you should play to its strengths, not its weaknesses. By making clear and focused requests, then double-checking the factual output your AI software produces, you’ll get the benefits of this remarkable new technology while dodging the hallucinations it occasionally produces.