We all learn by identifying patterns in the world around us. Language follows rules of grammar, nice feelings come from eating tasty food, and so on. People who are slow to detect complex patterns may have difficulty learning at school, and people whose brains read meaningful patterns into disconnected data points are often labeled conspiracy theorists.

Most of the time, common sense serves us well as a regulating influence, keeping us from being carried away by absurd thoughts and ideas. But common sense is very hard to program into an algorithm, and computers often struggle to tell good ideas from bad ones, as when Google’s AI search feature began advising people to eat rocks.

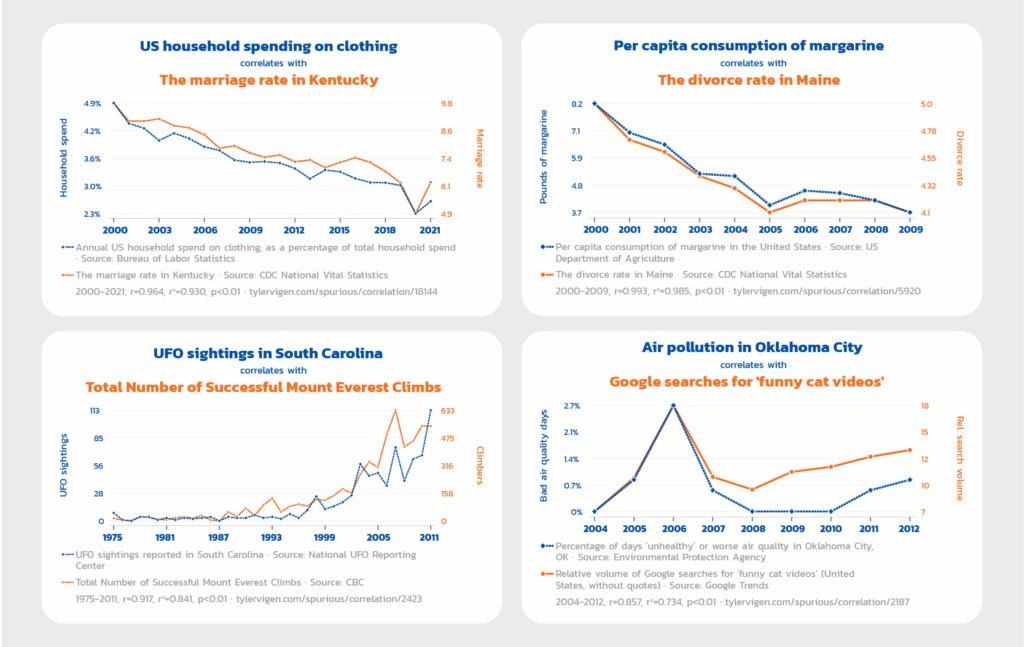

Indeed, there are all kinds of traps that pattern-detection software can fall into. How, for example, would a computer know to disregard the apparent connections we see in the graphs below?

Adapted from Spurious Correlations by Tyler Vigen, licensed under CC BY 4.0

Less amusing are the cases in which AI makes pattern-identification mistakes with real consequences for people’s lives. These mistakes can have several causes, and poor pattern recognition by the software is only one of them.

It’s all about the data

Programmers have their own biases, whether or not they are conscious of them, and those biases can be transferred into the software they create. There is also the problem of unrepresentative data collection: computers may receive information only from the parts of the world that are digitally active, for example, which leads to unbalanced conclusions about people and how they behave.

AI software learns by analyzing data sets, and if that data is more complete for one race, sex, religion, political persuasion, or geographic group than for another, the quality of the AI’s output may reflect that difference.

Real-world examples of these phenomena include facial recognition software that sometimes has an easier time identifying light-skinned people than people with darker complexions. In healthcare, diagnostic algorithms have likewise achieved higher accuracy rates for white patients than for nonwhite patients.

Google’s online job advertising system was found to be displaying high-paying jobs to men more often than to women. Amazon’s algorithm delivered similar results, albeit indirectly: it favored applicants who used words like ‘executed’ or ‘captured’ on their résumés, a stylistic choice more common among men than women. And when Midjourney, the AI art generator, was asked to show images of older people in specialized professions, it always showed older men.

Despite the best efforts of software companies to promote fairness and equality in their AI products, the self-learning software they create has a frustrating tendency to stray from that path.

Truth vs aspiration

AI is still a very young technology, and as data sets grow, the quality of its analysis is likely to converge across demographic groups. But there remains a philosophical problem at the heart of this endeavor: What if AI holds up a mirror to society, and we don’t like what we see?

Although certain biases in AI may be accurate insofar as they reflect current statistical reality, they also reinforce that reality. If, for example, a young girl types ‘woman’ into an AI art generator and it returns an image of a housewife, she may come to link those two concepts in her mind. She may then, if only subtly, begin to think less of her own career prospects and limit her imagination to the home.

Alternatively, if the AI is trained to ignore real-world probabilities and instead present visions of perfect equity, showing women as construction workers and auto mechanics as often as it shows them as nurses and therapists, then it loses its ability to serve as a mirror for society and becomes even more subject to the biases (or values) of the company and the programmers who design it.

There is no ideal answer, and every setting has its tradeoffs.

Where do we go from here?

Generative AI is a tool, even if it is a very powerful and specialized one. Different people may have different uses for it, so each user should be left to decide how accurate, or how utopian, they want its output to be. The AI could present disclaimers and content warnings, or in some cases suggest different truth settings, but past experiments with forced AI utopianism have been widely rejected by users, who found such efforts substantively useless and stylistically condescending.